It is now possible to run an LLM on your own Linux, Windows or macOS machine using a new tool called Jan. This lets a chatbot run offline on your own computing resources, and it does not seem to need expensive hardware such as an Nvidia A100 or multiple RTX 4090 cards.

System requirements:

- macOS: 13 or higher
- Windows:
  - Windows 10 or higher
  - To enable GPU support:
    - Nvidia GPU with CUDA Toolkit 11.7 or higher
    - Nvidia driver 470.63.01 or higher
- Linux:
  - glibc 2.27 or higher (check with `ldd --version`)
  - gcc 11, g++ 11, cpp 11 or higher, refer to this link for more information
  - To enable GPU support:
    - Nvidia GPU with CUDA Toolkit 11.7 or higher
    - Nvidia driver 470.63.01 or higher
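The version checks above can be scripted. The Python sketch below reads the glibc version with the standard library's `platform.libc_ver()` (an alternative to running `ldd --version` by hand) and compares dotted version strings against the listed minimums, such as glibc 2.27 or driver 470.63.01. The helper names `parse_version` and `meets_minimum` are hypothetical, and `platform.libc_ver()` may return an empty version on systems that do not use glibc.

```python
import platform


def parse_version(text: str) -> tuple[int, ...]:
    """Turn a dotted version string such as '470.63.01' into (470, 63, 1)."""
    return tuple(int(part) for part in text.split("."))


def meets_minimum(found: str, required: str) -> bool:
    """Return True if `found` is the same as or newer than `required`."""
    a, b = parse_version(found), parse_version(required)
    # Pad the shorter version with zeros so '2.27' and '2.27.0' compare equal.
    width = max(len(a), len(b))
    a += (0,) * (width - len(a))
    b += (0,) * (width - len(b))
    return a >= b


if __name__ == "__main__":
    lib, version = platform.libc_ver()
    if lib == "glibc" and version:
        print(f"glibc {version} meets 2.27 minimum: {meets_minimum(version, '2.27')}")
    else:
        print("glibc not detected; run `ldd --version` to check manually")
```

The same `meets_minimum` helper works for the Nvidia driver requirement if you pass it the driver version reported on your system.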


To enable GPU support, download CUDA Toolkit 11.7:

https://developer.nvidia.com/cuda-11-7-0-download-archive

The offline chatbot, Jan, is available here: https://github.com/janhq/jan?tab=readme-ov-file.

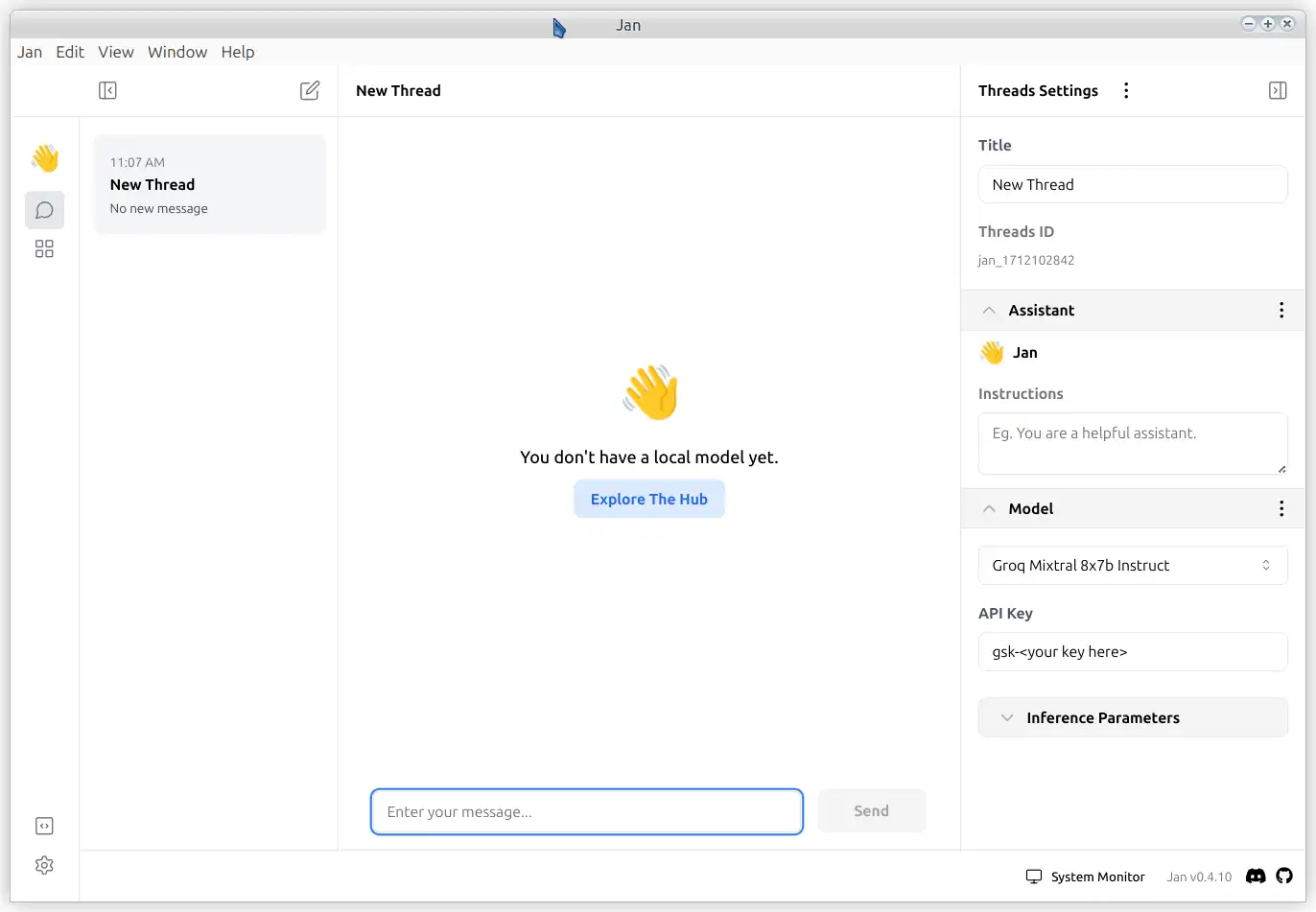

Download the AppImage and make it executable. Then download a model from the online hub so the chatbot has something to run. The Hermes Pro 7B model is a good choice for this example, and it is only 4 gigabytes.
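On Linux, making the AppImage executable means setting the execute permission bits on the downloaded file, usually with `chmod +x`. A minimal Python equivalent is sketched below; the file name `jan-linux-x86_64.AppImage` and the Downloads location are assumptions, so substitute the file you actually downloaded.

```python
import os
import stat
from pathlib import Path


def make_executable(path: str) -> None:
    """Add execute permission for user, group and others (like `chmod a+x`)."""
    p = Path(path)
    mode = p.stat().st_mode
    p.chmod(mode | stat.S_IXUSR | stat.S_IXGRP | stat.S_IXOTH)


if __name__ == "__main__":
    # Hypothetical file name and location; adjust to your actual download.
    appimage = Path.home() / "Downloads" / "jan-linux-x86_64.AppImage"
    if appimage.exists():
        make_executable(str(appimage))
        print(f"executable: {os.access(appimage, os.X_OK)}")
    else:
        print(f"not found: {appimage}")
```

Once the file is executable, it can be started from a terminal by running it directly.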

Explore the Hub to find a model to download. Each model listing will warn you if it will not work on your GPU.

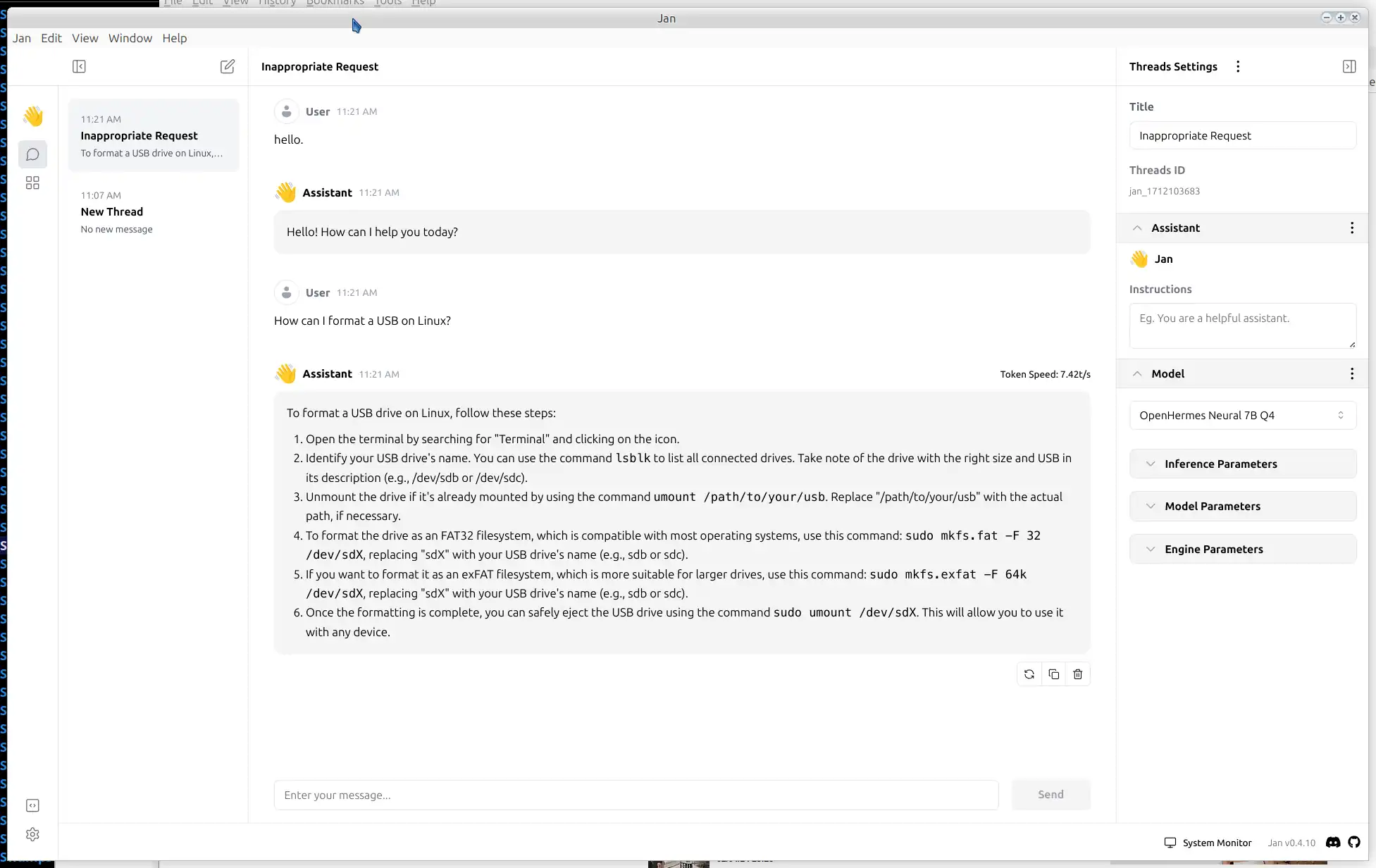

I ended up using the OpenHermes Neural 7B Q4 model, which is also only 4 gigabytes. Once it loaded, I was able to talk with the chatbot.
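Why is a 7B model only about 4 gigabytes? A rough estimate is parameters × bits per weight ÷ 8. The sketch below assumes about 4.5 bits per weight, roughly what llama.cpp's 4-bit Q4_0 format uses once its per-block scales are counted; the exact figure varies with the quantization format and the model's real parameter count, so treat the numbers as approximations. The function name `model_size_gb` is hypothetical.

```python
def model_size_gb(n_params: float, bits_per_weight: float) -> float:
    """Rough model size in gigabytes (10**9 bytes): parameters * bits / 8."""
    return n_params * bits_per_weight / 8 / 1e9


if __name__ == "__main__":
    n_params = 7e9  # nominal "7B" parameter count (assumption; real models vary)
    # Assumed ~4.5 bits/weight for a 4-bit quantized model, vs 16 for fp16 weights.
    for label, bits in [("4-bit (Q4)", 4.5), ("fp16", 16.0)]:
        print(f"{label}: ~{model_size_gb(n_params, bits):.1f} GB")
```

By this estimate the unquantized fp16 weights would need about 14 GB, while the 4-bit version needs a little under 4 GB, which is why a quantized 7B model can fit on a card with 6 gigabytes of VRAM with some room left over.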

This works very well on Ubuntu and is very neat. Now anyone can run an offline chatbot without needing expensive hardware: I am using an Nvidia RTX 2060, and it still works with its 6 gigabytes of VRAM. Jan also supports custom instructions for the chatbot and adjustable model parameters. I do wonder how you could create your own model; gathering the training data would certainly take a lot of time, depending on what you want it to focus on. But this is an amazing find.